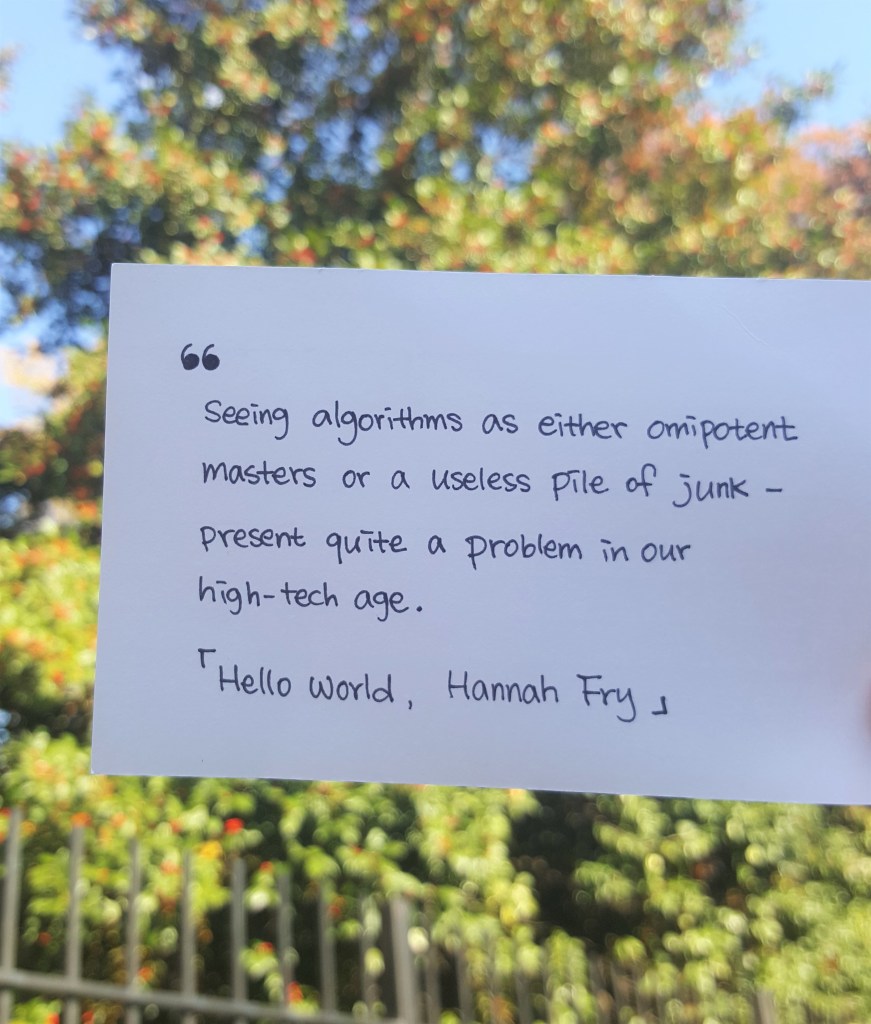

“There’s a hidden danger in building an automated system that can safely handle virtually every issue its designers can anticipate. (…) So they’ll have very little experience to draw on to meet the challenge of an unanticipated emergency.”

[Hello World: Being Human in the Age of Algorithms, Hannah Fry]

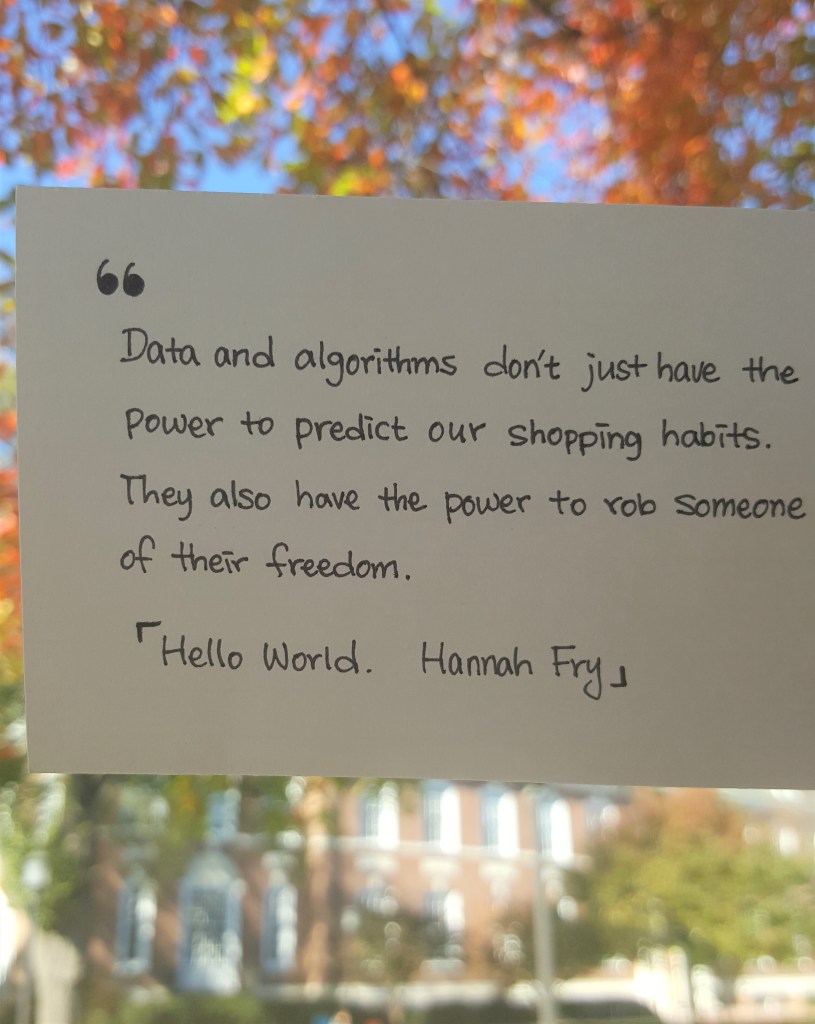

I was driving with my family from my home in the U.S. to Quebec, Canada, for a late-summer vacation, relying on Google Maps. Just after we crossed the border, I realized that my phone had no service, so of course Google Maps stopped working too. I made a desperate attempt to navigate by road signs alone, as my dad used to. Our world is fast becoming intelligent through recent developments in smart devices, algorithms, automated systems, and AI. We no longer need to remember our friends' phone numbers or home addresses. We don't even need to memorize the exact spelling of long words, because Google Search returns the right results from a misspelled query. But can we say that we (not the world) are becoming intelligent?

Large autonomous systems will inevitably become widespread. Autonomous cars, for example, will likely be common in the near future. The next generation may therefore never learn (or even experience) how to recover from a skid on an icy road, and that lack of experience could lead to a nasty accident on the day the autonomous system fails. As technology does more, we do less, whether thinking or experiencing. However, there are two sides to every story. Since the invention of the calculator and the computer, we have developed new research fields such as numerical analysis, scientific computing, and computational biology, greatly expanding human knowledge. I hope that the advent of large autonomous systems will provide not only answers to the problems we face now but also a vision for a better future.