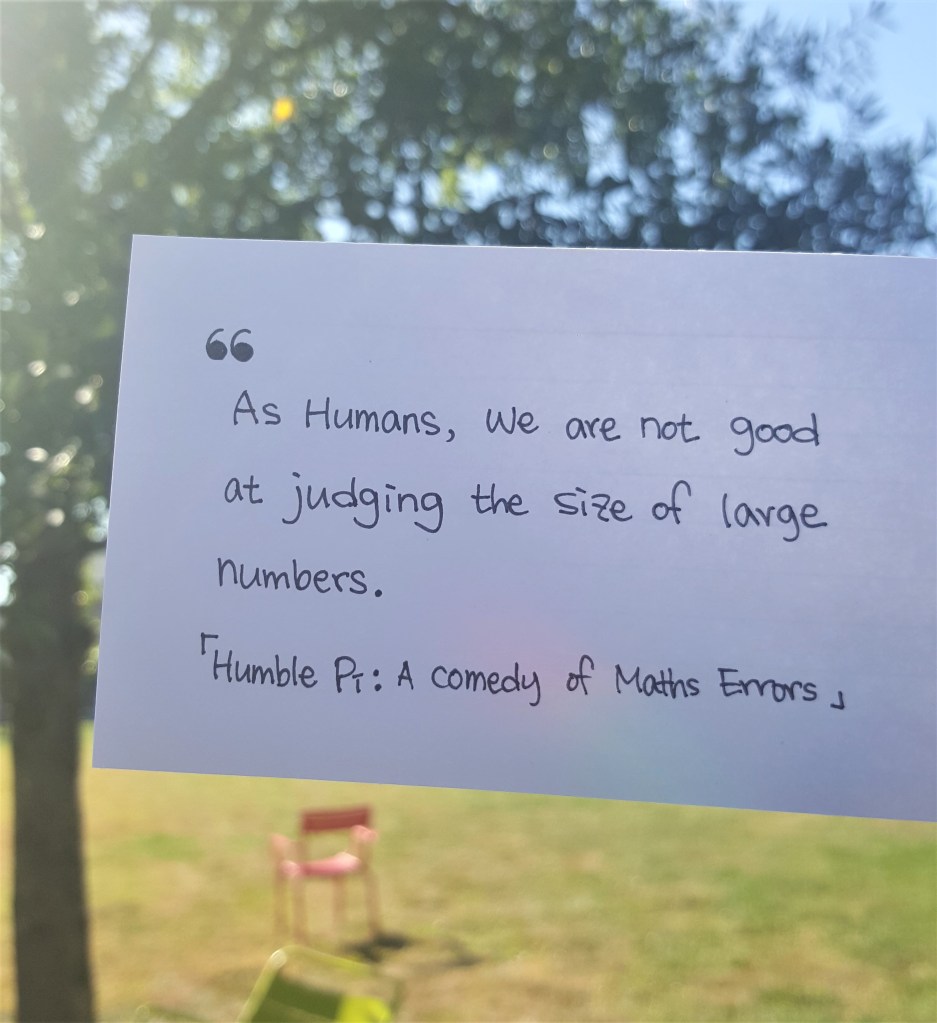

“As humans, we are not good at judging the size of large numbers. And even when we know one is bigger than another, we don’t appreciate the size of the difference.”

[Humble Pi: A Comedy of Maths Errors, Matt Parker]

In the Stone Age, a hundred might have been a big enough number to count a herd of deer for hunting or a pile of gathered nuts. In the early (and mid) 20th century, a million was enough to earn rich people the name ‘millionaire’, but now it is far too small to measure Mark Zuckerberg’s net worth (a million is still BIG money for me, by the way). In the age of Big Data, what number counts as a really big number? Bill Gates, one of the pioneers who ushered in the computer age, is often quoted as saying in the 1980s: “for computer memory, 640K ought to be enough for anybody” (he has denied ever saying it, but the quote lives on). Nobody can predict the big number correctly, and this is human nature.

However, we still need to estimate certain big numbers for a data-driven model, for our business, or for our blogs. Since the smartphone was unveiled, data has been collected at super-fast speed, leading to the age of AI and Big Data. Nowadays, when we build a model, we consider its capacity to deal with tremendous amounts of data (beyond a trillion). The Internet of Things (IoT) and autonomous vehicles will generate enormous amounts of data every second. So we need to keep rethinking what a big number is, again and again. That is why I am preparing the first event for the billionth visitor to my blog. Do you think this number is still small? It depends on your action. Please visit my blog more!